Introduction

Robot localization is the process of determining where a mobile robot is located with respect to its environment. In this lab, I used simulation software to implement a probabilistic method for localizing a mobile robot. This is in contrast to the previous lab, which used a non-probabilistic method.

Lab Tasks

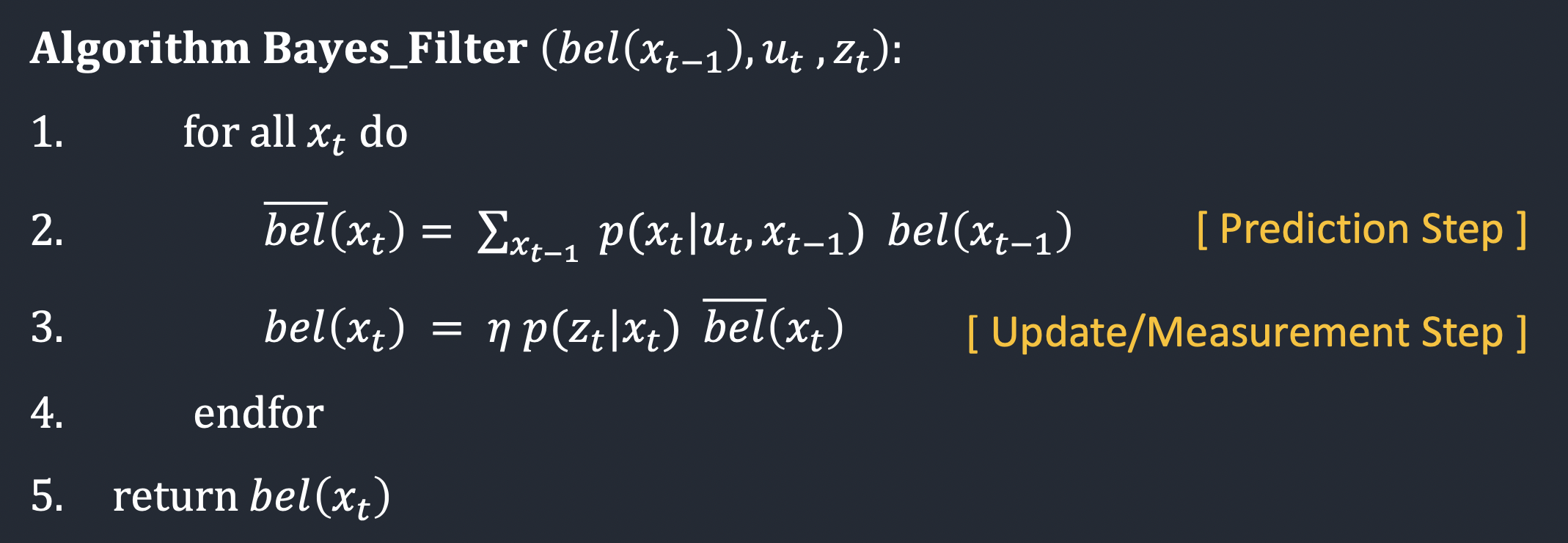

First, I will briefly go over the theory behind the Bayes filter. Here is the Bayes filter algorithm:

The Bayes filter takes three inputs: (1) the belief distribution over the robot's previous pose (i.e., its state), (2) the control input during the current time step, and (3) the sensor measurements made during the current time step. A belief is a probability distribution that captures one's "best guess" about something. For localization, the belief about the robot's pose is the "best guess" at where the robot is within the map. The algorithm loops over every pose the robot could possibly be in and calculates the probability that the robot is in each of them. It does this in two sub-steps: prediction and update.

The prediction step takes the previous belief distribution and the control input for the current time step. Given the belief over the robot's pose at the previous step and the control applied to the robot, it computes a predicted belief over the robot's current pose, before any sensor data is considered.
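To make the prediction step concrete, here is a minimal sketch on a made-up 1D grid world rather than the lab's actual map. The `predict` function, the four-cell world, and the 0.8/0.2 motion probabilities are all assumptions chosen for illustration.

```python
import numpy as np

def predict(prior, transition):
    """Prediction step of a discrete Bayes filter.

    prior[j]         : belief that the robot was in cell j at the previous step
    transition[i, j] : p(current cell = i | previous cell = j, control)
    """
    # Sum over every previous cell j, weighting each by how likely the
    # control is to carry the robot from cell j to cell i.
    return transition @ prior

# Hypothetical 1D world with 4 cells; the control is "move one cell right".
# Assumed motion noise: the robot moves as commanded with probability 0.8
# and stays where it was with probability 0.2.
n = 4
transition = np.zeros((n, n))
for j in range(n):
    transition[min(j + 1, n - 1), j] += 0.8  # intended move, clamped at the wall
    transition[j, j] += 0.2                  # no movement

prior = np.array([1.0, 0.0, 0.0, 0.0])       # robot known to start in cell 0
bel_bar = predict(prior, transition)
print(bel_bar)
```

Starting from a belief concentrated in cell 0, the predicted belief places most of its mass in cell 1 and the remainder in cell 0. In general, prediction spreads the belief out, because motion adds uncertainty.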

The update step then incorporates the information from the sensor measurements made during the current time step and updates the belief distribution accordingly.
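As a rough illustration of the update step, the sketch below weights a predicted belief by a Gaussian sensor likelihood and then normalizes. The four-cell world, the expected range readings, and the noise level `sigma` are hypothetical values, not taken from the lab.

```python
import numpy as np

def gaussian(x, mu, sigma):
    """Gaussian probability density evaluated at x."""
    return np.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * np.sqrt(2.0 * np.pi))

def update(bel_bar, expected, measurement, sigma):
    """Update step: weight the predicted belief by the sensor likelihood."""
    likelihood = gaussian(measurement, expected, sigma)  # p(z | x) for each cell
    bel = likelihood * bel_bar
    return bel / bel.sum()                               # normalize so the belief sums to 1

# Hypothetical range (in meters) the sensor should read from each of 4 cells.
expected = np.array([3.0, 2.0, 1.0, 0.5])
bel_bar = np.array([0.2, 0.8, 0.0, 0.0])  # an example predicted belief
bel = update(bel_bar, expected, measurement=2.1, sigma=0.2)
print(bel)
```

A reading of 2.1 m is far more consistent with cell 1 than with cell 0, so almost all of the belief ends up in cell 1. This sketch does not guard against the case where every cell has zero weight, which would make the normalization divide by zero.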

In my actual implementation of the Bayes filter, I divided these steps even further. I defined the helper functions compute_control and odom_motion_model, and I modeled the sensor noise as a Gaussian. Here is the code for the helper functions just mentioned, the prediction step, and the update step:

Note that this code was written by Anya Probowo who took this course last year.
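For readers unfamiliar with these helpers, the following is an independent sketch, not the code credited above, of how functions with these names are commonly written for the standard odometry motion model. The signatures, the use of radians, the `normalize_angle` helper, and the noise parameters `rot_sigma` and `trans_sigma` are all assumptions.

```python
import math

def normalize_angle(a):
    """Wrap an angle in radians to the interval [-pi, pi)."""
    return (a + math.pi) % (2.0 * math.pi) - math.pi

def gaussian(x, mu, sigma):
    """Gaussian probability density evaluated at x."""
    return math.exp(-0.5 * ((x - mu) / sigma) ** 2) / (sigma * math.sqrt(2.0 * math.pi))

def compute_control(cur_pose, prev_pose):
    """Decompose the motion between two (x, y, theta) poses into an initial
    rotation, a straight-line translation, and a final rotation."""
    dx = cur_pose[0] - prev_pose[0]
    dy = cur_pose[1] - prev_pose[1]
    rot1 = normalize_angle(math.atan2(dy, dx) - prev_pose[2])
    trans = math.hypot(dx, dy)
    rot2 = normalize_angle(cur_pose[2] - prev_pose[2] - rot1)
    return rot1, trans, rot2

def odom_motion_model(cur_pose, prev_pose, u, rot_sigma, trans_sigma):
    """p(cur_pose | prev_pose, u): compare the control this transition would
    require against the control u actually reported by odometry."""
    rot1, trans, rot2 = compute_control(cur_pose, prev_pose)
    return (gaussian(normalize_angle(rot1 - u[0]), 0.0, rot_sigma)
            * gaussian(trans, u[1], trans_sigma)
            * gaussian(normalize_angle(rot2 - u[2]), 0.0, rot_sigma))

# Hypothetical odometry reading: the robot went from the origin to (1, 1)
# and ended up facing 90 degrees.
prev = (0.0, 0.0, 0.0)
u = compute_control((1.0, 1.0, math.pi / 2), prev)
p_match = odom_motion_model((1.0, 1.0, math.pi / 2), prev, u, 0.3, 0.2)
p_other = odom_motion_model((2.0, 0.0, 0.0), prev, u, 0.3, 0.2)
print(u, p_match > p_other)
```

In a grid-based filter, the prediction step would call a function like `odom_motion_model` for each pair of previous and current cells and sum the results weighted by the previous belief.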

Testing the Bayes Filter

I tested the Bayes filter algorithm implemented above on a pre-planned trajectory. Here is a video of the results:

The green trajectory is the ground truth (where the robot actually is), the blue trajectory is the trajectory according to the belief distribution (where the robot thinks it is), and the red trajectory is based solely on the odometry data. As you can see, the belief trajectory from the Bayes filter follows the ground truth much more closely than the odometry data alone.